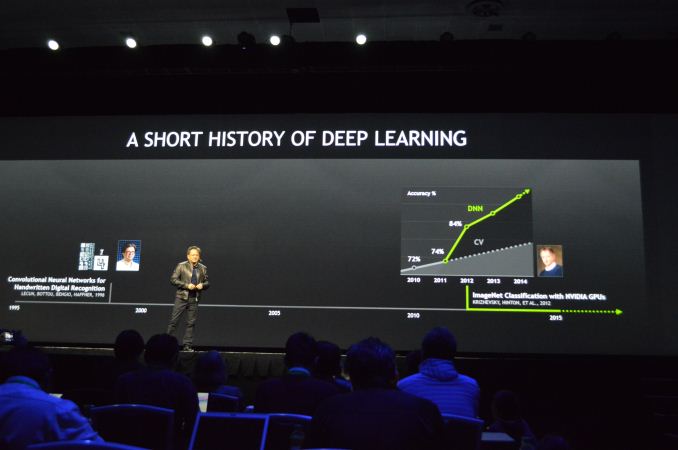

The GPU Technology Conference has begun with a BANG—a "Big Bang," as NVIDIA's cofounder Jen-Hsun Huang told a packed audience at the opening day keynote. And this year's theme is all about using NVIDIA's GPU silicon for what they call "deep learning"—something I knew very little about until today. It turns out that deep learning is pretty cool and is all about teaching computers to see and think, enabling everything from image recognition (think the magic behind Google image search) to autonomous cars and robotics.

Of course this wouldn't be a GPU technology conference without the release of a new chip, and NVIDIA did not disappoint, announcing its new GeForce GTX TITAN X with an accompanying room-rocking soundtrack and stunning HD visuals. You've got to hand it to these guys: they know how to take a boring hunk of silicon and give it a huge, sexy debut with fanfare and excitement. If my friend Doug MacMillan is reading this, I'm expecting on-stage fireworks next year!

Now back to the deep learning... This is how I understand it (you can get more technical information here): Researchers around the world use GPU technology to build neural networks, essentially training computers to think. The example that sticks with me was presented by Andrej Karpathy from Stanford University. He showed how a computer can look at an image and then write a sentence describing it.

I'll pause here to let that sink in.

From 45 days to a few

No human involved: the computer describes the image that it sees. The image processing part was kind of obvious to me: take an image, run it through a GPU, and detect edges, colors, patterns and so on. But it turns out this is not the most processor-intensive part of the research. Where it gets really heavy is in the training: giving the computer enough data (thousands and thousands of photos) so it can learn. The examples were impressive. An image of a jetliner became "an aeroplane sitting on a runway." Another image was a photo, taken from behind, of a guy driving a horse-drawn cart. My non-GPU-enabled brain saw a big black hat, but the computer wrote "man driving a horse-drawn cart down the street." Apparently, training that takes about 45 days on a traditional computer now takes just a few days on one NVIDIA chip.

As with any "training," it takes a while to reach the goal of 100% accuracy. Maybe it's just my sense of humor, but I only snapped photos of the images the computer got wrong (see below). Still, the technology is advancing at an incredible rate.

A couple of years ago, Microsoft demonstrated for the first time that a computer could identify images more accurately than a human. This is the "Big Bang" moment Huang was referring to. The Microsoft-programmed computer could distinguish not only cats from dogs and cars from buses, but also different breeds of dogs. And it did all of this more accurately than a very smart human researcher.

I wish I owned some Teslas

So where does all this deep learning take us? Well, Huang sees another "big bang" moment coming in the very near future with self-driving cars. He even brought Elon Musk, CEO of Tesla, on stage to talk about it. (I'm thinking Elon would come talk at my event if I owned three Tesla vehicles like Huang…) But wait, there's more. How about training a computer so it not only sees what is right in front of it (the obvious), but can also see the future and predict what will happen, all based on big data? Yes, we're already doing some of this with analytics: predictive maintenance for industrial and military companies as part of our Industrial Internet initiative, for example. But all of this could be taken to a whole new level with deep learning and GPU technology.

I think I have found my new buzzword for 2015 in "deep learning." Watch this space for more on how GE and NVIDIA enable all this deep learning stuff. I know a few things for sure: I can't predict all the applications deep learning will lead us into, there will be some surprises, and NVIDIA and the whole community of engineers and data scientists working in this field will blow us away with computer learning in the years to come.

For a snapshot of the keynote, and a lot more information than I included here, check out this NVIDIA blog post.